Combining Specialized Tools by Utilizing Derived Data

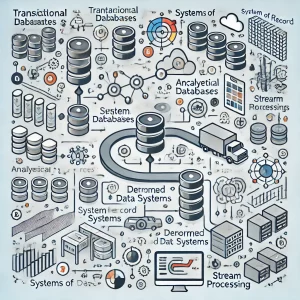

Modern data systems increasingly integrate specialized tools by way of derived data. No single system handles every kind of data processing well, so organizations take a modular approach: they use several systems that each excel in a specific area and keep data flowing between them. This lets them draw on the strengths of different storage and processing technologies to meet a wide range of application needs, and it is central to designing applications around dataflow.

Why Specialized Tools Are Essential

Modern applications need to handle large data volumes with various access patterns. For example, a web application may need to manage transactional data, perform complex analytical queries, and support full-text search capabilities. Different types of databases or data processing tools suit each of these needs:

- Transactional Databases (OLTP): Optimized for short online transactions, these systems ensure data integrity through ACID (Atomicity, Consistency, Isolation, Durability) properties, making them ideal where data accuracy is critical.
- Analytical Databases (OLAP): Designed for complex queries and reporting, OLAP systems aggregate and analyze large datasets and are often used in business intelligence.
- Search Engines: Search tools like Elasticsearch and Solr provide powerful full-text search capabilities, allowing complex queries over unstructured data.
- Data Warehouses: Consolidating data from various sources, these systems handle large volumes and read-heavy workloads, making them ideal for analysis and reporting.
- Stream Processing Systems: Technologies like Apache Kafka (event streaming) and Apache Flink (stream computation) process data as it arrives, enabling applications to react to incoming events with low latency.

By combining these specialized tools, organizations can build a robust data architecture to handle their diverse application needs effectively.

What Is Derived Data?

Derived data is created from existing data through transformations or processing steps. This concept is essential in integrating multiple data systems, as it enables consistent and coherent data across various representations.

Systems of Record vs. Derived Data Systems

Data systems typically fall into two categories:

- Systems of Record: These systems hold the original, unaltered data and maintain data integrity. For example, a relational database that stores user information serves as a system of record.
- Derived Data Systems: Derived systems generate new data from transformations applied to original data. A cache holding frequently accessed data or a search index for fast lookups are examples. If derived data is lost, it can be regenerated from the original source.

Understanding these distinctions clarifies data flows and helps manage dependencies across components.
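To make the distinction concrete, here is a minimal sketch in which a small in-memory dictionary stands in for the system of record and an inverted index plays the role of derived data. The `users` rows and the `build_search_index` function are hypothetical names chosen for illustration; a real deployment would read from a database and write to a search engine.

```python
from collections import defaultdict

# Hypothetical system of record: user rows keyed by primary key.
users = {
    1: {"name": "Ada Lovelace", "city": "London"},
    2: {"name": "Alan Turing", "city": "London"},
    3: {"name": "Grace Hopper", "city": "New York"},
}


def build_search_index(records):
    """Derive an inverted index (token -> set of record ids) from source rows."""
    index = defaultdict(set)
    for record_id, row in records.items():
        for value in row.values():
            for token in str(value).lower().split():
                index[token].add(record_id)
    return dict(index)


search_index = build_search_index(users)

# Simulate losing the derived data, then regenerate it from the system of record.
previous_index = search_index
search_index = None
search_index = build_search_index(users)
```

Because the index is a pure function of the source rows, the rebuilt copy is equal to the one that was lost. The same is not true in reverse: the original rows cannot be recovered from the index alone.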

Challenges in Data Integration: Using Specialized Tools with Derived Data

As organizations adopt specialized tools, integrating these systems can become challenging. Key issues include:

- Data Synchronization: Keeping data consistent across systems with different update frequencies and models can be complex.
- Data Transformation: Data often requires transformation to fit a target system’s schema, which can introduce latency and complexity.
- Error Handling: Errors in multi-system data flows need robust handling to maintain data integrity.
- Performance Optimization: Each system has distinct performance characteristics, making it challenging to optimize data flow efficiently.
- Monitoring and Observability: Maintaining system health and performance requires a clear understanding of how data flows through the system.
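The transformation and error-handling challenges above often meet in the same piece of code. The sketch below shows one possible way to handle them together: rows that cannot be mapped to the target schema are set aside in a "dead letter" list rather than aborting the whole run. The field names (`user_id`, `first_name`, `last_name`) and both functions are assumptions made for this example, not part of any particular tool.

```python
def to_search_document(row):
    """Map a source row onto the (hypothetical) schema of a search index."""
    return {
        "id": int(row["user_id"]),
        "text": f'{row["first_name"]} {row["last_name"]}'.strip().lower(),
    }


def transform_all(rows):
    """Transform every row; collect failures instead of aborting the run."""
    documents, dead_letters = [], []
    for row in rows:
        try:
            documents.append(to_search_document(row))
        except (KeyError, ValueError, TypeError) as exc:
            # Keep the failing row and the reason so it can be inspected later.
            dead_letters.append({"row": row, "error": repr(exc)})
    return documents, dead_letters


rows = [
    {"user_id": "1", "first_name": "Ada", "last_name": "Lovelace"},
    {"user_id": "oops", "first_name": "Alan", "last_name": "Turing"},  # bad id
    {"user_id": "3", "first_name": "Grace"},  # missing last_name
]
documents, dead_letters = transform_all(rows)
```

Here one row is transformed successfully and two land in `dead_letters`, which also gives a simple signal to monitor: a growing dead-letter list suggests the source and target schemas have drifted apart.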

Analyzing Dataflows: Using Specialized Tools with Derived Data

To integrate multiple data systems effectively, organizations must understand their dataflows thoroughly. Key considerations include:

- Data Writing Order: Writing data in the correct order is crucial. For instance, data should first be recorded in the system of record before propagating to derived systems like search indexes.
- Change Data Capture (CDC): CDC helps track changes in the system of record, propagating updates consistently to derived systems.
- Event Sourcing: This approach records all changes as a sequence of events, allowing systems to recreate the current state from past events.
- Idempotence: Ensuring that operations yield the same result even when applied multiple times is critical for consistency, especially in distributed systems.
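Several of these considerations can be illustrated together. The following sketch assumes a change log in which every event carries a monotonically increasing `offset` and is delivered in order; the event shape and the `DerivedCache` class are hypothetical and not tied to any specific CDC tool. The derived store remembers the last offset it applied, so redelivered events have no further effect.

```python
class DerivedCache:
    """A derived key-value view that applies change events idempotently."""

    def __init__(self):
        self.data = {}
        self.last_applied = 0  # highest change-log offset applied so far

    def apply(self, event):
        # Skip anything already applied, so redelivery cannot change the state.
        if event["offset"] <= self.last_applied:
            return False
        if event["op"] == "upsert":
            self.data[event["key"]] = event["value"]
        elif event["op"] == "delete":
            self.data.pop(event["key"], None)
        self.last_applied = event["offset"]
        return True


# Changes captured from the system of record, in commit order.
change_log = [
    {"offset": 1, "op": "upsert", "key": "user:1", "value": "Ada"},
    {"offset": 2, "op": "upsert", "key": "user:2", "value": "Alan"},
    {"offset": 3, "op": "delete", "key": "user:1", "value": None},
]

cache = DerivedCache()
for event in change_log:
    cache.apply(event)

# Redeliver the entire log, as an at-least-once transport might.
replayed = [cache.apply(event) for event in change_log]
```

After the second pass the cache is unchanged and every call in `replayed` reports that nothing was applied. Feeding the same log into a fresh `DerivedCache` reproduces the same state, which is the property that event sourcing relies on.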

Strategies for Combining Specialized Tools

Combining tools through derived data can be achieved with several approaches:

- Data Pipelines: Data pipelines automate data flow between systems. Tools like Apache NiFi (data routing and transformation) or Apache Airflow (workflow scheduling and orchestration) coordinate the extraction, transformation, and loading steps.
- Microservices Architecture: Microservices allow teams to build independent services that interact with different data systems. Each service can handle specific data transformations, promoting modularity and scalability.
- APIs and Webhooks: APIs and webhooks let systems notify one another of changes as they happen, helping derived data stay up to date.
- Batch and Stream Processing: Combining batch and stream processing covers both historical and real-time data: batch jobs process historical data, while stream processing handles updates as they arrive.
- Federated Query Interfaces: Federated query interfaces allow users to query across multiple systems without needing to know the underlying sources, simplifying data access.
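As a small illustration of combining batch and stream processing, the sketch below maintains a derived page-view count in two ways: a batch function that recomputes the view from all historical events, and a stream step that folds in one new event at a time. The event shape and function names are assumptions for this example; in practice these roles would be filled by a batch framework and a stream processor.

```python
from collections import Counter


def batch_page_views(events):
    """Batch job: recompute the whole derived view from historical events."""
    return Counter(event["page"] for event in events)


def stream_update(view, event):
    """Stream step: fold a single new event into the existing view."""
    view[event["page"]] += 1
    return view


historical = [{"page": "/home"}, {"page": "/docs"}, {"page": "/home"}]
live = [{"page": "/docs"}, {"page": "/pricing"}]

# Start from the batch result, then keep it current with streamed events.
view = batch_page_views(historical)
for event in live:
    stream_update(view, event)

# A full batch recomputation over all events should agree with the
# incrementally maintained view.
recomputed = batch_page_views(historical + live)
```

Checking that `view` equals `recomputed` is a useful sanity test for designs like this: if the two paths disagree, the derived data has drifted and can be repaired by rerunning the batch job.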

The Future of Data Systems

As data systems evolve, integrating specialized tools with derived data will likely accelerate. Emerging trends include:

- Increased Cloud Service Adoption: Cloud data services offer scalability and flexibility, enabling easy integration of multiple tools without the overhead of on-premises management.
- Data Mesh Architectures: Data mesh promotes decentralized data ownership and self-serve infrastructure, making data systems more agile and responsive.
- Advances in Data Integration Technologies: Newer integration tools offer more robust options for managing data flows, transformations, and synchronization.
- Focus on Real-Time Data Processing: Growing demand for real-time insights will push organizations to adopt more stream processing solutions for quick, actionable insights.
- Emphasis on Data Governance and Compliance: With stricter data privacy regulations, organizations will need strong data governance frameworks to ensure compliance.

Conclusion

Combining specialized tools with derived data is essential for modern data architectures. By distinguishing between systems of record and derived data systems, organizations can integrate multiple technologies to meet diverse application needs. As data systems evolve, focusing on modularity, automation, and real-time processing will shape the future of data integration, enabling organizations to gain maximum value from their data. Organizations embracing these principles will be better prepared for the complexities of data management and for driving innovation through data-driven insights.
