Most enterprises don’t trip on models. They trip on everything wrapped around them: data access, governance handoffs, security sign-offs, and who owns day-two operations (and cost). An AI readiness assessment exists to de-risk that messy first mile. A good partner translates AI automation services into a realistic plan: what you can ship now, what becomes feasible after two or three targeted fixes, and what to park for later.

Short version: map business value to technical reality before you spin up pilots that never reach production or deliver measurable impact.

What The Assessment Actually Covers

1. Business Alignment & Use-Case Fit – tie automation to P&L levers and measurable KPIs; score value vs. complexity; decide where AI app development services beat off-the-shelf options.

2. Data Foundations – lineage, quality SLAs, domain ownership, and PII handling; retrieval patterns for LLMs (RAG), chunking, vectorization, and cache policy.

3. Platform & Infrastructure – GPU/CPU strategy, inference endpoints, latency budgets; observability and FinOps guardrails; fit with any existing AI development service.

4. Security, Privacy & Compliance – prompt-injection mitigations, DLP/KMS, secret rotation, model-risk management.

5. Model & MLOps Lifecycle – versioning, feature stores, eval harnesses, drift detection, rollback; human-in-the-loop where error costs are high.

6. Governance & Policy – RACI for approvals, model cards, acceptable-use policy, audit trails you can actually follow.

7. People & Change – skills inventory, training path, CoE structure, vendor management for advanced AI services.

The point isn’t a pretty scorecard. It’s clarity on “what must move from level 2 → 3” so AI automation services run reliably, not just on demo day.

A Pragmatic Maturity Model

0 Ad-hoc: shadow AI, no policy; CSVs on a laptop.

1 Aware: draft rules, one pilot, scattered pipelines.

2 Repeatable: named owners, baseline evals, partial monitoring.

3 Managed: MLOps baseline, RAG in prod, cost tracking.

4 Optimized: automated evals, drift alerts, budget caps.

5 Transforming: portfolio run like products; measurable enterprise impact.

Absolute scores matter less than the gap (delta) in the dimensions that revenue-critical use cases depend on. Move the blockers first. Then scale.
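One way to make “move the blockers first” concrete: compare each dimension’s current level on the 0–5 scale with the level a specific revenue-critical use case needs, and work the largest gaps first. This Python sketch uses hypothetical dimension names and scores; in a real assessment both columns come out of the scoring exercise.

```python
from dataclasses import dataclass


@dataclass
class Dimension:
    name: str
    current: int   # assessed maturity level, 0-5
    required: int  # level the target use case needs to run in production


def blockers(dimensions: list[Dimension]) -> list[tuple[str, int]]:
    """Return (name, gap) for each dimension below its required level, largest gap first."""
    gaps = [(d.name, d.required - d.current) for d in dimensions if d.current < d.required]
    # sorted() is stable (also with reverse=True), so equal gaps keep their input order.
    return sorted(gaps, key=lambda item: item[1], reverse=True)


# Hypothetical scores for one revenue-critical use case.
claims_triage = [
    Dimension("data_foundations", current=1, required=3),
    Dimension("platform", current=2, required=3),
    Dimension("security", current=3, required=3),
    Dimension("governance", current=2, required=2),
    Dimension("mlops", current=1, required=2),
]

print(blockers(claims_triage))
# [('data_foundations', 2), ('platform', 1), ('mlops', 1)]
```

Security and governance already meet the bar in this made-up example, so the first fixes go to data foundations, not to raising every score evenly.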

Tangible Outputs A Real Partner Hands You

– AI strategy map linking AI automation services to KPIs (cycle time, AHT, FCR, NPS).

– Use-case portfolio (value × feasibility) with a 6–12 week pilot slate.

– Reference architecture for data + RAG + model gateways + monitoring.

– Security & governance pack: model cards, approvals, audit trails.

– Operating model: CoE charter, RACI, skills plan, and a vendor plan for AI app development services and any managed AI development service.

– Value & risk tracker with baselines and go/no-go criteria.
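The value × feasibility portfolio can start as something this simple. This Python sketch assumes 1–5 scales for both axes, their product as the score, and a cut-off of 12 for the pilot slate; those choices and the use-case names are illustrative, not a standard.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    value: int        # 1-5: expected impact on the KPI the use case targets
    feasibility: int  # 1-5: data, platform, and risk readiness today

    @property
    def score(self) -> int:
        return self.value * self.feasibility


def pilot_slate(candidates: list[UseCase], min_score: int = 12, max_pilots: int = 3) -> list[str]:
    """Rank by value x feasibility; keep up to max_pilots that clear min_score."""
    ranked = sorted(candidates, key=lambda uc: uc.score, reverse=True)
    return [uc.name for uc in ranked if uc.score >= min_score][:max_pilots]


# Hypothetical portfolio entries.
portfolio = [
    UseCase("claims_triage", value=5, feasibility=4),          # score 20
    UseCase("contract_summaries", value=3, feasibility=5),     # score 15
    UseCase("demand_forecast_agent", value=5, feasibility=2),  # score 10
    UseCase("faq_bot", value=2, feasibility=5),                # score 10
]

print(pilot_slate(portfolio))  # ['claims_triage', 'contract_summaries']
```

High-value, low-feasibility items such as the forecasting agent are natural candidates for the “feasible after two or three targeted fixes” bucket rather than the discard pile.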

Quick Composite Examples

1. Insurer (claims): fragmented documents; a four-week OCR + entity-normalization pass let an LLM-based triage queue cut manual touches by ~22%.

2. Manufacturer (service ops): no prompt governance; adding guardrails + red-teaming cut harmful outputs by ~93% before go-live.

3. Retail (catalog ops): GPU overspend; moving batch jobs to CPU inference saved ~38% with negligible latency hit.

Small, targeted fixes. Outsize gains. Classic first-mile wins for AI automation services once governance and data behave.

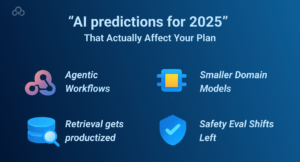

“AI predictions for 2025” That Actually Affect Your Plan

1. Agentic workflows will chain tools, not just prompts, so sandboxing and approval gates matter more than ever.

2. Retrieval gets productized: managed RAG + hybrid search will be normal; data contracts and TTL policies stop silent drift.

3. Smaller, domain-specific models win where privacy and latency rule; evaluate edge inference paths early.

4. Safety eval shifts left: prompt tests in CI (not just code), with automated red-teaming and rollback ready.

These aren’t crystal-ball takes; they reprioritize your backlog and shift where advanced AI services deliver the most leverage.
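To illustrate the approval gates that agentic workflows need, here is a minimal Python sketch of a default-deny gate between an agent and its tools: unregistered tools are refused, and tools flagged as risky run only if an approver callback says yes. The tool names, the lambda approver, and the refund threshold are hypothetical; a real deployment would route approvals to a person or workflow system and also sandbox the tool process itself.

```python
from typing import Callable

ToolFn = Callable[[dict], str]


class ApprovalGate:
    """Default-deny gate between an agent and its tools (illustrative sketch)."""

    def __init__(self, approve: Callable[[str, dict], bool]):
        self._tools: dict[str, tuple[ToolFn, bool]] = {}
        self._approve = approve  # stand-in for a human reviewer or workflow hook

    def register(self, name: str, fn: ToolFn, needs_approval: bool) -> None:
        self._tools[name] = (fn, needs_approval)

    def call(self, name: str, args: dict) -> str:
        if name not in self._tools:
            raise PermissionError(f"tool not allow-listed: {name}")
        fn, needs_approval = self._tools[name]
        if needs_approval and not self._approve(name, args):
            raise PermissionError(f"approval denied for: {name}")
        return fn(args)


# Hypothetical setup: lookups run freely; refunds need sign-off, and this
# approver only signs off on refunds of 100 or less.
gate = ApprovalGate(approve=lambda name, args: args.get("amount", 0) <= 100)
gate.register("lookup_order", lambda a: f"order {a['id']}: shipped", needs_approval=False)
gate.register("issue_refund", lambda a: f"refunded {a['amount']}", needs_approval=True)

print(gate.call("lookup_order", {"id": 42}))      # order 42: shipped
print(gate.call("issue_refund", {"amount": 50}))  # refunded 50
```

Because the gate raises instead of returning an error string, the agent loop cannot silently route around a denial; the caller has to handle it explicitly.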

Cost Hotspots You’ll Almost Always Find

Use this table as a triage checklist when you operationalize AI automation services and AI app development services.

| Area | Early indicator | Why it spikes |
|---|---|---|
| Uncached retrieval | QPS climbs, vector store bill jumps | No retrieval cache or poor chunking |
| Unbounded context | Tokens per request creep up | Prompts/templates ignore budget limits |
| Over-provisioned GPUs | Low utilization graphs | Batch jobs parked on premium nodes |
| Human review | Backlog keeps growing | No triage policy; everything escalates |
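The first two rows of the Cost Hotspots table usually have the cheapest fixes: a retrieval cache and a hard context budget. A minimal Python sketch, assuming an in-process cache keyed on the exact query string and a whitespace word count as a stand-in for real token counting (swap in your model’s tokenizer); `fake_retrieve` is a hypothetical placeholder for a vector-store query.

```python
import time
from typing import Callable


class TTLCache:
    """In-process cache for retrieval results; entries expire after ttl_s seconds."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._store: dict[str, tuple[float, list[str]]] = {}

    def get_or_fetch(self, query: str, fetch: Callable[[str], list[str]]) -> list[str]:
        now = self.clock()
        hit = self._store.get(query)
        if hit is not None and now - hit[0] < self.ttl_s:
            return hit[1]
        chunks = fetch(query)  # only misses and expired entries reach the vector store
        self._store[query] = (now, chunks)
        return chunks


def fit_to_budget(chunks: list[str], max_tokens: int) -> list[str]:
    """Keep top-ranked chunks until the next one would exceed the context budget.
    Whitespace word count approximates tokens here (assumption)."""
    kept, used = [], 0
    for chunk in chunks:
        cost = len(chunk.split())
        if used + cost > max_tokens:
            break
        kept.append(chunk)
        used += cost
    return kept


calls = []


def fake_retrieve(query: str) -> list[str]:
    """Hypothetical stand-in for a vector-store query; records each call."""
    calls.append(query)
    return ["policy covers water damage", "claims over 10k need review", "deductible is 500"]


cache = TTLCache(ttl_s=300)
for _ in range(3):
    context = fit_to_budget(cache.get_or_fetch("water damage claim", fake_retrieve), max_tokens=9)

print(len(calls), context)  # 1 ['policy covers water damage', 'claims over 10k need review']
```

Three identical requests cost one vector-store call, and the third chunk is dropped instead of letting the prompt grow. Exact-match keys are the simplest choice; normalized or semantic cache keys raise hit rates but need their own staleness rules.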

How Consultants De-Risk Adoption (In Practice)

Instrument everything (prompts, model versions, cost, latency, outcomes). Keep blast radius small with canaries and strong rollbacks. Bake evaluation into CI. Treat AI like software: same SDLC discipline, just with model-specific guardrails. Sounds obvious. It’s also where platforms become either boringly reliable… or astonishingly noisy.
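A canary with an automatic rollback trigger can be sketched in a few lines of Python. This version assumes a hash-based traffic split, treats any failed eval or guardrail check on a canary response as an error, and rolls back once the candidate’s error rate exceeds a threshold after a minimum sample. The version labels and thresholds are hypothetical.

```python
import hashlib


class Canary:
    """Route a slice of traffic to a candidate version; roll back automatically on regressions."""

    def __init__(self, stable: str, candidate: str, percent: int,
                 max_error_rate: float, min_samples: int = 100):
        self.stable, self.candidate = stable, candidate
        self.percent = percent                # share of traffic (0-100) sent to the candidate
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples        # don't judge the candidate on a handful of requests
        self.requests = 0
        self.errors = 0
        self.rolled_back = False

    def route(self, request_id: str) -> str:
        if self.rolled_back:
            return self.stable
        # Stable hash, so the same request or user always lands in the same bucket.
        bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % 100
        return self.candidate if bucket < self.percent else self.stable

    def record(self, version: str, ok: bool) -> None:
        if version != self.candidate or self.rolled_back:
            return
        self.requests += 1
        self.errors += 0 if ok else 1
        if self.requests >= self.min_samples and self.errors / self.requests > self.max_error_rate:
            self.rolled_back = True  # all later traffic goes to the stable version


canary = Canary("prompt-v7", "prompt-v8", percent=5, max_error_rate=0.02, min_samples=50)
for i in range(50):
    canary.record("prompt-v8", ok=(i % 10 != 0))  # simulated 10% failure rate
print(canary.rolled_back, canary.route("user-123"))  # True prompt-v7
```

The rollback here is a flag flip, not a redeploy, which is what keeps the blast radius small: the stable version never stopped serving.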

Readiness Recap: Priorities, Risks, and Wins

An AI readiness assessment isn’t paperwork; it’s a decision engine. Score the foundations (data, platform, security, governance, people), then push AI automation services where the math is already good. Standardize evaluation for accuracy and safety. Centralize observability (latency, cost, drift). Publish ownership: who approves, who rolls back, who pays. Do those three things and your AI development service budget stops feeling speculative.

Stay sharp on spend. The Cost Hotspots table is intentionally blunt: retrieval caching, context budgets, right-sized compute, and a simple review-triage policy eliminate most overruns before they balloon. This is exactly where advanced AI services pay off: they lock in patterns that control both reliability and unit economics as you scale.

And the AI predictions for 2025? Agentic flows, managed retrieval, smaller domain models: none of that changes the fundamentals. It does raise the bar for governance and evaluation. If your assessment ends with (1) a prioritized use-case portfolio, (2) enforceable policy, and (3) an instrumented platform from day one, you’re ready to move from pilots to production. That’s when AI app development services stop being experiments and start delivering throughput.

Technical FAQs

Vendor suite or custom build: how do we decide?

Start with constraints: data sensitivity, latency SLOs, and compliance. If PII can’t leave your VPC or the SLO is sub-200 ms, you’ll lean toward custom builds or private endpoints; otherwise, managed AI app development services can cut time-to-value. Run a two-week bake-off on your eval set and score accuracy, p95 latency, cost per 1K tokens, and lock-in risk.
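A bake-off scorecard along those lines, as a Python sketch. It assumes the latency SLO is a hard filter, min-max normalizes each metric across the remaining candidates, and applies weights; the candidate names, measurements, and weights are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    accuracy: float     # share of eval-set items passed, 0-1
    p95_ms: float       # p95 latency measured on the eval set
    cost_per_1k: float  # USD per 1K tokens, blended input/output
    lock_in: int        # 1 (portable) to 5 (hard to leave), team judgment


# Hypothetical weights; tune to your priorities.
WEIGHTS = {"accuracy": 0.5, "p95_ms": 0.2, "cost_per_1k": 0.2, "lock_in": 0.1}
HIGHER_IS_BETTER = {"accuracy"}


def scorecard(cands: list[Candidate], slo_p95_ms: float) -> list[tuple[str, float]]:
    """Drop candidates that miss the latency SLO, then min-max normalize and weight each metric."""
    eligible = [c for c in cands if c.p95_ms <= slo_p95_ms]
    results = []
    for c in eligible:
        total = 0.0
        for metric, weight in WEIGHTS.items():
            values = [getattr(x, metric) for x in eligible]
            lo, hi = min(values), max(values)
            v = getattr(c, metric)
            if hi == lo:
                norm = 1.0  # every candidate ties on this metric
            elif metric in HIGHER_IS_BETTER:
                norm = (v - lo) / (hi - lo)
            else:
                norm = (hi - v) / (hi - lo)  # lower latency, cost, and lock-in score higher
            total += weight * norm
        results.append((c.name, round(total, 3)))
    return sorted(results, key=lambda r: r[1], reverse=True)


bakeoff = [  # hypothetical bake-off results
    Candidate("vendor_suite", accuracy=0.88, p95_ms=190, cost_per_1k=0.012, lock_in=4),
    Candidate("private_endpoint", accuracy=0.84, p95_ms=120, cost_per_1k=0.008, lock_in=2),
    Candidate("self_hosted_small", accuracy=0.80, p95_ms=150, cost_per_1k=0.004, lock_in=1),
    Candidate("large_hosted_model", accuracy=0.91, p95_ms=450, cost_per_1k=0.030, lock_in=4),
]

for name, score in scorecard(bakeoff, slo_p95_ms=200):
    print(name, score)
```

Min-max normalization makes scores relative to the candidates in one run, so rerun the whole scorecard when the field changes instead of comparing scores across bake-offs. Filtering on the SLO first reflects the constraints-first advice: a model that misses the latency budget should not be rescued by a high accuracy weight.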

What’s the “minimum viable” MLOps for generative AI?

Version prompts and models, store eval runs, trace every request, and enable one-click rollback. Add drift checks and cost budgets. That’s the floor, not the ceiling. Also track p95/p99 latency and token spend per route so AI automation services don’t surprise FinOps.
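Per-route latency and token tracking, sketched in Python under stated assumptions: an in-memory store, nearest-rank percentiles, and hypothetical route names and token caps. A production setup would export these numbers to your observability stack rather than keep them in one process.

```python
import math
from collections import defaultdict


class RouteMetrics:
    """Per-route latency percentiles and token spend against a budget (in-memory sketch)."""

    def __init__(self, token_budgets: dict[str, int]):
        self.token_budgets = token_budgets  # hypothetical per-route token caps for the period
        self.latencies_ms: dict[str, list[float]] = defaultdict(list)
        self.tokens: dict[str, int] = defaultdict(int)

    def record(self, route: str, latency_ms: float, tokens: int) -> None:
        self.latencies_ms[route].append(latency_ms)
        self.tokens[route] += tokens

    def percentile(self, route: str, p: float) -> float:
        """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
        samples = sorted(self.latencies_ms.get(route, []))
        if not samples:
            raise ValueError(f"no samples for route {route!r}")
        rank = math.ceil(p * len(samples) / 100)
        return samples[max(rank, 1) - 1]

    def over_budget(self) -> list[str]:
        """Routes whose spend exceeds their cap; any spend on an uncapped route is flagged."""
        return [r for r, used in self.tokens.items() if used > self.token_budgets.get(r, 0)]


metrics = RouteMetrics(token_budgets={"claims_summary": 5_000, "faq": 1_000})
for i in range(1, 101):
    metrics.record("claims_summary", latency_ms=float(i * 10), tokens=40)
metrics.record("faq", latency_ms=90.0, tokens=1_200)

print(metrics.percentile("claims_summary", 95))  # 950.0
print(metrics.percentile("claims_summary", 99))  # 990.0
print(metrics.over_budget())                     # ['faq']
```

Flagging uncapped routes is a deliberate default-deny choice: a new route shows up in the budget report the first time it spends tokens, instead of after the invoice.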

How do we prevent leaks of sensitive data?

Policy plus plumbing: input/output redaction, allow-listed tools, per-tenant encryption, retrieval scoped to approved corpora. Default-deny outbound calls; open least-privilege paths when justified. Add periodic red-teaming and short log-retention windows for extra safety.
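A minimal Python sketch of two pieces of that plumbing: regex-based redaction applied to prompts and outputs, and a default-deny egress check. The patterns, placeholder labels, and host names (under the reserved example.com domain) are illustrative assumptions; regexes alone miss plenty of PII, so treat this as the shape of the control rather than a DLP product.

```python
import re
from urllib.parse import urlparse

# Illustrative patterns only; production DLP needs much broader, tested coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits, optional separators
}

# Default-deny egress: only these hosts may be called by tools or the model gateway.
ALLOWED_HOSTS = {"api.internal.example.com", "search.internal.example.com"}


def redact(text: str) -> str:
    """Replace PII matches with typed placeholders; run on prompts and on model outputs."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def outbound_allowed(url: str) -> bool:
    """Allow only HTTPS calls to allow-listed hosts; everything else is denied."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS


print(redact("Customer jane.doe@corp.com, SSN 123-45-6789, card 4111 1111 1111 1111 asked for a refund"))
# Customer [EMAIL], SSN [SSN], card [CARD] asked for a refund
print(outbound_allowed("https://api.internal.example.com/v1/orders"))  # True
print(outbound_allowed("https://paste.example.net/upload"))            # False
```

Typed placeholders keep redacted prompts useful to the model (it still knows an email was there) while the raw value never leaves your boundary.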

How should we measure ROI for AI automation services?

Track workflow outcomes (cycle/handle time, FCR, accuracy, CSAT, unit cost). Include cost-to-maintain in TCO (tokens, storage, hosting, human review). No “phantom savings”: count only measured ones. Baseline before and after, and attribute wins to a specific AI development service or change.
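The ROI arithmetic fits in a few lines once the baselines exist. This Python sketch assumes handle time is the outcome being priced and uses made-up figures for volume, loaded labor cost, and run costs; substitute your measured values and extend it with the other KPIs you track.

```python
from dataclasses import dataclass


@dataclass
class Period:
    cases: int             # cases handled in the month
    avg_handle_min: float  # measured average handle time per case


@dataclass
class RunCosts:
    """Monthly cost-to-maintain; every figure should come from bills or logs, not estimates."""
    tokens: float
    storage: float
    hosting: float
    human_review: float

    def total(self) -> float:
        return self.tokens + self.storage + self.hosting + self.human_review


def net_monthly_savings(baseline: Period, after: Period, run: RunCosts,
                        loaded_cost_per_hour: float) -> float:
    """Labor hours saved at the post-launch volume, priced at loaded cost, minus run costs."""
    saved_min_per_case = baseline.avg_handle_min - after.avg_handle_min
    labor_saved = saved_min_per_case * after.cases / 60 * loaded_cost_per_hour
    return labor_saved - run.total()


# Hypothetical measured figures for one queue.
before = Period(cases=9_500, avg_handle_min=12.0)
after = Period(cases=10_000, avg_handle_min=9.0)
run = RunCosts(tokens=3_200, storage=300, hosting=1_500, human_review=6_000)

print(net_monthly_savings(before, after, run, loaded_cost_per_hour=48.0))  # 13000.0
```

In this made-up example, dropping human review from TCO would report 19,000 instead of 13,000, which is exactly the kind of phantom saving the answer warns against.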

What belongs in an AI acceptable-use policy?

Approved providers, PII rules, model cards, escalation paths, logging expectations. Keep it short and enforceable; review quarterly. Put it where engineers live (repo/Confluence) and require policy checks on PRs touching prompts.
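A policy check on prompt-touching PRs can start as a small script. This Python sketch assumes a hypothetical repo layout (prompts under `prompts/`, eval runs under `evals/`), a `key: value` header at the top of each prompt file, and a made-up provider allow-list; adapt all three to your own policy.

```python
from pathlib import PurePosixPath

# Hypothetical policy values; mirror your own acceptable-use policy.
APPROVED_PROVIDERS = {"internal-gateway", "vendor-a"}
REQUIRED_KEYS = ("owner", "provider")


def under(path: str, folder: str) -> bool:
    """True if the repo-relative path sits inside the given top-level folder."""
    return PurePosixPath(path).parts[:1] == (folder,)


def parse_header(text: str) -> dict[str, str]:
    """Read 'key: value' lines at the top of a prompt file, stopping at the first blank line."""
    header = {}
    for line in text.splitlines():
        if not line.strip():
            break
        key, sep, value = line.partition(":")
        if sep:
            header[key.strip()] = value.strip()
    return header


def policy_violations(changed: dict[str, str]) -> list[str]:
    """`changed` maps each file path touched by the PR to its new content."""
    prompts = [p for p in changed if under(p, "prompts")]
    problems = []
    if prompts and not any(under(p, "evals") for p in changed):
        problems.append("prompt changed without an updated eval run under evals/")
    for path in prompts:
        header = parse_header(changed[path])
        problems += [f"{path}: missing '{k}'" for k in REQUIRED_KEYS if k not in header]
        if "provider" in header and header["provider"] not in APPROVED_PROVIDERS:
            problems.append(f"{path}: provider '{header['provider']}' is not approved")
    return problems


pr = {
    "prompts/claims_triage.txt": "owner: claims-platform\nprovider: vendor-b\n\nYou triage claims...",
    "src/app.py": "print('hello')",
}
for problem in policy_violations(pr):
    print(problem)
```

A CI job would feed the PR’s changed files into `policy_violations` and fail the check when the list is non-empty; how you collect the diff depends on your CI system.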

Which models do we standardize on?

Choose a “default” per class (general LLM, code, vision) based on accuracy on your eval set, latency SLOs, and cost per 1K tokens, plus hosting/licensing options. Leave an escape hatch for niche tasks. Publish a deprecation path so upgrades don’t break AI app development services downstream.

Do we really need a Center of Excellence (CoE)?

A lightweight CoE curates patterns, eval sets, and guardrails, and manages vendor relationships for advanced AI services, so five teams don’t solve the same problem five ways. Think a two-pizza team with office hours and a living pattern library.

Where do data contracts fit?

They stabilize quality. Contracts lock schema, SLAs, and lineage so your retrieval layer stays reliable even when upstream systems change. Enforce them with CI schema checks and versioned contracts so they hold up as the AI predictions for 2025 materialize.
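A versioned contract plus a CI check can be sketched in plain Python. The `Contract` shape here (a type per column and a set of required columns) is a hypothetical stand-in for whatever format your team uses, such as JSON Schema or Avro, and the document fields are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    name: str
    version: int
    fields: dict[str, type]   # column -> expected Python type
    required: frozenset[str]  # columns that must be present in every record


def validate(records: list[dict], contract: Contract) -> list[str]:
    """Check records against the contract before they reach chunking and embedding."""
    errors = []
    for i, rec in enumerate(records):
        for col in sorted(contract.required - set(rec)):
            errors.append(f"row {i}: missing {col}")
        for col, value in rec.items():
            expected = contract.fields.get(col)
            if expected is None:
                errors.append(f"row {i}: unexpected column {col}")
            elif value is not None and not isinstance(value, expected):  # nulls allowed here
                errors.append(f"row {i}: {col} should be {expected.__name__}")
    return errors


def breaking_changes(old: Contract, new: Contract) -> list[str]:
    """Removed or retyped fields break downstream consumers."""
    changes = [f"removed {c}" for c in old.fields if c not in new.fields]
    changes += [f"retyped {c}" for c in old.fields
                if c in new.fields and new.fields[c] is not old.fields[c]]
    return changes


v1 = Contract("policy_docs", 1, {"doc_id": str, "body": str, "updated_at": str},
              frozenset({"doc_id", "body"}))
v2 = Contract("policy_docs", 2, {"doc_id": str, "body": str, "updated_at": int},
              frozenset({"doc_id", "body"}))

batch = [{"doc_id": "A1", "body": "Water damage is covered..."}, {"doc_id": 7, "title": "Flood FAQ"}]
print(validate(batch, v1))
# ['row 1: missing body', 'row 1: doc_id should be str', 'row 1: unexpected column title']
print(breaking_changes(v1, v2))  # ['retyped updated_at']
```

One reasonable CI policy: a non-empty `validate` result blocks the ingestion run, and a non-empty `breaking_changes` result means the change ships as a new contract version that consumers opt into.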

Would you like to read more educational content? Read our blogs at Cloudastra Technologies, or contact us for business enquiries at Cloudastra Contact Us.