Introduction

Demos impress. Production pays. What closes the gap between the two is a repeatable lifecycle: clear goals, clean data, measured models, and a deployment path that won’t freak out security or finance. A seasoned AI managed service provider keeps that loop tight so your AI modernization doesn’t stall and your legacy system upgrade doesn’t break reporting on Monday morning. Think of it as guardrails plus speed, not either/or. AI consulting services help set the rules; an AI development service team ships the thing you can actually run.

Frame the problem and pick real metrics

State the decision, the latency you need (batch, near-real-time, or real-time), and what a mistake costs. Then pick a tiny set of KPIs you’ll live with:

- Task success rate (or win-rate)

- Precision/recall (or F1) for classification

- P95 latency

- Cost per request

- Human override/escalation rate

If a model can’t clear these in tests, it doesn’t go live. If it slips in prod, it rolls back. Simple rule, saves drama. An AI managed service provider will wire these gates into your process so it’s not “someone’s opinion” later.
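A gate like this can be a few lines of code rather than a meeting. Here is a minimal sketch, assuming the five KPIs above arrive as a plain dictionary; the metric names, the threshold values, and the `decide` helper are all hypothetical and should be replaced with numbers your team has agreed on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gate:
    metric: str
    threshold: float
    higher_is_better: bool


# Hypothetical thresholds for illustration only.
GATES = [
    Gate("task_success_rate", 0.85, True),
    Gate("f1", 0.80, True),
    Gate("p95_latency_ms", 1200.0, False),
    Gate("cost_per_request_usd", 0.02, False),
    Gate("human_override_rate", 0.10, False),
]


def failed_gates(measured, gates=GATES):
    """Return the names of gates that fail; a missing metric counts as a failure."""
    failures = []
    for gate in gates:
        value = measured.get(gate.metric)
        if value is None:
            failures.append(gate.metric)
        elif gate.higher_is_better and value < gate.threshold:
            failures.append(gate.metric)
        elif not gate.higher_is_better and value > gate.threshold:
            failures.append(gate.metric)
    return failures


def decide(measured, in_production):
    """Map gate results to an action: promote/keep when clean, block/rollback otherwise."""
    if not failed_gates(measured):
        return "keep" if in_production else "promote"
    return "rollback" if in_production else "block"
```

Treating a missing metric as a failure is a deliberate choice here: a model that was never measured should not slip through because nobody ran the test.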

Get the data house in order

Inventory your sources: databases, logs, documents, tickets, vendor feeds. Add the unsexy pieces: lineage, consent, PII tags, retention rules. For AI modernization, move from brittle exports to a change-data-capture (CDC) stream into a governed lake/lakehouse. For a legacy system upgrade, start with a thin ETL bridge so you can ship without breaking upstream reports.

Labeling? Use a mix: a gold set with human review, weak supervision for scale, and a couple of programmatic checks to keep labels steady. Your AI managed service provider should also push for producer SLAs (freshness, schema stability). Small effort, big stability.
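A producer SLA is easier to enforce when it is checked on every batch. The sketch below assumes a single feed with a fixed set of columns and a one-hour freshness limit; `EXPECTED_SCHEMA`, `MAX_STALENESS`, and `check_batch` are hypothetical names, not part of any particular data platform.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract for one feed: column names and Python types.
EXPECTED_SCHEMA = {"ticket_id": int, "body": str, "created_at": str}
MAX_STALENESS = timedelta(hours=1)


def check_batch(rows, last_event_time, now=None):
    """Return a list of SLA violations (freshness and schema) for one batch."""
    now = now or datetime.now(timezone.utc)
    problems = []
    if now - last_event_time > MAX_STALENESS:
        problems.append("stale")
    for i, row in enumerate(rows):
        # Schema stability: the set of columns must match exactly.
        if set(row) != set(EXPECTED_SCHEMA):
            problems.append(f"row {i}: columns changed")
            continue
        for col, typ in EXPECTED_SCHEMA.items():
            if not isinstance(row[col], typ):
                problems.append(f"row {i}: {col} is not {typ.__name__}")
    return problems
```

An empty list means the batch honors the contract; anything else is a message you can send straight back to the producing team.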

Mini case: A regional insurer replaced nightly CRM CSVs with CDC and basic text cleanup. Training quality rose ~28%; manual triage fell ~19%, before any model tune-ups.

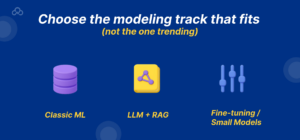

Choose the modeling track that fits (not the one trending)

You’ve got three sane paths:

- Classic ML for stable, explainable tasks (risk scores, routing, classification). Needs a feature pipeline, imbalance handling, cross-validation, and a model registry.

- LLM + RAG when the knowledge changes and answers must be grounded in your own content. The craft lives in chunking, embedding choice, retrieval quality, and guardrails.

- Fine-tuning / small models when your domain language is steady, latency targets are tight, or unit cost at scale rules the budget.

Start light (prompting or RAG), prove value, then fine-tune if latency/cost math says so. A good AI development service will document the crossover with evaluations and a quick cost curve, not just vibes.
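The cost side of that crossover is simple arithmetic: a fine-tune carries a one-off cost and, if it pays off, a lower cost per request. A rough sketch follows, assuming both per-request costs are already measured; the function name and every dollar figure in the usage lines are made up for illustration.

```python
import math


def breakeven_requests(fixed_cost, cost_per_req_light, cost_per_req_tuned):
    """Requests needed before the tuned path is cheaper overall.

    Returns None when the tuned path never pays back, i.e. when it is
    not cheaper per request than the light (prompting/RAG) path.
    """
    saving = cost_per_req_light - cost_per_req_tuned
    if saving <= 0:
        return None
    return math.ceil(fixed_cost / saving)


# Hypothetical inputs: a $5,000 fine-tune, $0.012/request with RAG,
# $0.004/request with the tuned small model.
requests_needed = breakeven_requests(5000, 0.012, 0.004)
monthly_volume = 200_000  # assumed traffic
months_to_payback = requests_needed / monthly_volume
```

If the payback period is longer than you expect the domain language to stay stable, the fine-tune probably isn't worth it yet. This covers cost only; latency needs its own measurement.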

Evaluation and risk: “no evals, no promotion”

Build an offline harness with a golden set and scenario suites. Track accuracy, bias/robustness probes, refusal behavior, and jailbreak resistance. Then test online with canaries and A/B, plus human-in-the-loop for high-risk paths. Add policy checks (PII suppression, leakage detection) and prompt-injection defenses. This is where AI consulting services and engineering meet: what’s “safe enough” is agreed before launch day. A mature AI managed service provider automates the scorecards and pass/fail gates so decisions are fast and auditable.
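The offline half of that harness can start very small. Below is a minimal sketch of a golden-set run with a pass/fail gate, assuming a classification-style task where exact match is a fair score; free-text LLM answers would need a softer scorer. The examples, `keyword_stub`, and `run_golden_set` are hypothetical stand-ins.

```python
# Hypothetical golden examples; in practice these come from human-reviewed data.
GOLDEN_SET = [
    {"input": "I can't log in", "expected": "account_access"},
    {"input": "charge me twice", "expected": "billing"},
    {"input": "refund my order", "expected": "billing"},
    {"input": "app crashes on start", "expected": "bug"},
]


def run_golden_set(predict, golden, min_accuracy):
    """Score a predict(text) -> label callable and apply a pass/fail gate."""
    if not golden:
        raise ValueError("golden set is empty")
    failures = [ex for ex in golden if predict(ex["input"]) != ex["expected"]]
    accuracy = (len(golden) - len(failures)) / len(golden)
    return {
        "accuracy": accuracy,
        "passed": accuracy >= min_accuracy,
        "failures": failures,  # keep these for the audit trail
    }


def keyword_stub(text):
    """Toy stand-in for the model under test."""
    text = text.lower()
    if "log in" in text:
        return "account_access"
    if "refund" in text or "charge" in text:
        return "billing"
    return "other"


report = run_golden_set(keyword_stub, GOLDEN_SET, min_accuracy=0.9)
```

Returning the failing examples alongside the score is what makes the decision auditable: the scorecard shows which cases blocked the release as well as the number.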

MLOps/LLMOps: ship on repeat, not by heroics

Production means the same result tomorrow as today. Put guardrails in the pipeline, not just in Slack:

- Versioned releases for models and prompts with approvals and instant rollback.

- Registries & stores your auditors can follow (model registry; feature store or vector DB with lineage).

- Serving that fits the job: batch, online, or streaming; an LLM router for multi-provider fallback; semantic caching to cut spend.

- Observability that matters: traces, P95 latency, refusal/hallucination rate, drift alerts, cost/request, on one dashboard.

- SRE basics: SLOs, autoscale, rate/budget caps, DR plan, artifact signing.
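To make the router idea from the list concrete, here is a bare-bones sketch of multi-provider fallback. It assumes each provider is wrapped in a plain Python callable that takes a prompt and either returns text or raises; `route` and the provider names are hypothetical, and a real router would add timeouts, retries, and budget checks.

```python
def route(prompt, providers):
    """Try providers in order; return (name, answer) from the first that succeeds.

    `providers` is an ordered list of (name, callable) pairs, cheapest or
    preferred first. Raises RuntimeError if every provider fails.
    """
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # any provider failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))
```

Returning the provider name with the answer lets the dashboard report provider share and lets you trace which path served a given request.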

In brownfield AI modernization, use a strangler-fig move: drop in a sidecar inference service, send 5–10% of traffic, watch the graphs, then expand. A mature AI managed service provider keeps security consistent across paths (VPC peering, KMS, policy-as-code) so you gain speed without leaking risk.
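One common way to send a fixed share of traffic to the sidecar is a deterministic hash split, so the same user or account always lands on the same path. This is a sketch under that assumption; `in_canary` and the choice of key are hypothetical.

```python
import hashlib


def in_canary(request_key: str, percent: float) -> bool:
    """Deterministically place roughly `percent`% of keys in the canary.

    Hashing a stable key (user ID, account ID) keeps each caller on one
    path, and raising `percent` only adds keys; it never moves an
    existing canary key back to the legacy path.
    """
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100


def handle(request_key, payload, legacy, sidecar, percent=5.0):
    """Route one request to the sidecar or the legacy path."""
    target = sidecar if in_canary(request_key, percent) else legacy
    return target(payload)
```

Expanding from 5% to 10% is then a one-number change, and rolling back is setting `percent` to 0.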

Cost control without hand-waving (FinOps in practice)

Run AI like you pay the bill (because you do). Levers that actually move numbers:

- Raise semantic cache hit rate (20–60% savings is common).

- Mix providers via a router; don’t overpay for every task.

- Use smaller or quantized models when quality allows.

- Add prompt/token budgets; cut wasteful verbosity.

- Batch where latency allows; keep SLAs honest.

Table (reference): Cost levers vs impact

| Lever | Cost Impact | Trade-off |
| --- | --- | --- |
| Semantic cache (+20–60% hits) | High | Slight staleness risk |
| Smaller/quantized model | Med–High | Possible quality drop |
| Provider mix/routing | Medium | Integration complexity |
| Token budgets/caps | Medium | Occasional truncation |
| Batch windows | Medium | Higher latency tolerance |

A competent AI managed service provider will publish a FinOps dashboard: cost per request, cache hit rate, provider share, and a forecast you can defend in a meeting.
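For readers who haven't seen one, a semantic cache returns a stored answer when a new prompt is close enough in meaning to an earlier one, and its hit rate is the number the dashboard tracks. The sketch below is a toy: `toy_embed` is a bag-of-words stand-in for a real embedding model, the 0.8 threshold is an arbitrary assumption, and a production cache would use a vector index and expiry rules.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.8, embed=toy_embed):
        self.threshold = threshold  # assumed similarity cut-off
        self.embed = embed
        self.entries = []  # list of (vector, answer)
        self.hits = 0
        self.lookups = 0

    def get(self, prompt):
        """Return a cached answer for a similar-enough prompt, else None."""
        self.lookups += 1
        vec = self.embed(prompt)
        best = max(self.entries, key=lambda e: cosine(vec, e[0]), default=None)
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            self.hits += 1
            return best[1]
        return None

    def put(self, prompt, answer):
        self.entries.append((self.embed(prompt), answer))

    @property
    def hit_rate(self):
        return self.hits / self.lookups if self.lookups else 0.0
```

The threshold is where the staleness and wrong-answer risk lives: set it too low and users get answers to questions they didn't ask.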

Technical FAQs

RAG or fine-tuning?

If knowledge changes and you need citations, start with RAG. When language stabilizes and latency or cost bites, add a small fine-tune. An AI managed service provider should prove the crossover with evals and cost curves.

How do we secure LLMs behind our VPC?

Use PII-aware logging, policy-as-code on inputs and outputs, KMS-backed secrets, private endpoints or on-premises serving, and VPC peering. Include tool allowlists and prompt-injection defenses. This is standard procedure for AI modernization and for any legacy system upgrade that touches regulated data.

How do we catch model or data drift early?

Watch input distributions, output quality, cache hit rate, and task success. When thresholds breach, trigger re-indexing, retraining, or a router change. Your AI managed service provider should automate both alerts and playbooks.
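For the input-distribution part, one widely used signal is the Population Stability Index (PSI), which compares a baseline sample of a numeric feature with a recent one. The sketch below is a plain-Python version with equal-width bins taken from the baseline; the `psi` helper, the bin count, and the alert level are assumptions to tune, though 0.2 is a commonly quoted rule of thumb for a meaningful shift.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a recent sample.

    Both inputs are non-empty lists of numbers. Bins are equal-width over
    the baseline's range; out-of-range values fall into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


PSI_ALERT = 0.2  # assumed alert level; tune per feature


def drifted(expected, actual):
    return psi(expected, actual) > PSI_ALERT
```

When `drifted` fires, run the playbook for that feature (re-index, retrain, or change the router); the check itself only raises the flag.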

What’s “minimum viable observability” for LLM apps?

Traces, P95 latency, refusal/hallucination rates, cost/request, plus a nightly golden-set run. An AI development service can set this up fast; AI consulting services help set thresholds and escalation paths.
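Most of those numbers fall out of the trace records themselves. Here is a small sketch of the rollup, assuming each trace is a dictionary with `latency_ms`, `refused`, and `cost_usd` fields (hypothetical names) and using the nearest-rank definition of P95; hallucination rate is left out because it needs a judged label that raw traces don't carry.

```python
def summarize(traces):
    """Roll a non-empty list of trace records up into dashboard numbers."""
    n = len(traces)
    if n == 0:
        raise ValueError("no traces to summarize")
    latencies = sorted(t["latency_ms"] for t in traces)
    # Nearest-rank P95: the smallest value with at least 95% of samples
    # at or below it. Integer math gives ceil(0.95 * n) without float error.
    rank = (95 * n + 99) // 100
    return {
        "p95_latency_ms": latencies[rank - 1],
        "refusal_rate": sum(1 for t in traces if t["refused"]) / n,
        "cost_per_request_usd": sum(t["cost_usd"] for t in traces) / n,
    }
```

Run it per hour or per release, and compare against the thresholds agreed up front.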

How does Build-Operate-Transfer actually work?

Phase 1 (Build) proves value with a thin slice. Phase 2 (Operate) runs it with SLOs, budget caps, and audits. Phase 3 (Transfer) hands over with IaC, runbooks, training, and quarterly checkpoints. A responsible AI managed service provider leaves you with the keys, the logs, and the eval harness.

Ship Small, Measure Hard, Scale What Works

Treat the lifecycle as a loop: frame → data → model → evaluate → deploy → observe → repeat. Start with one thin slice, prove value in daylight, harden the controls, and only then scale. If you’re juggling AI modernization alongside a legacy system upgrade, lean on an AI managed service provider to align AI consulting services (governance and KPIs) with an AI development service (build and run). Keep the metrics visible, the costs capped, and the rollback one click away. That’s how AI stops being a lab toy and starts paying for itself.

Then make it routine: hold a monthly “keep, kill, or fix” review against SLOs; retire anything that can’t meet task success or cost targets; and keep portability real with an LLM router plus evals so switching providers isn’t chaos. Practice failover once a quarter, budget for drift work (re-indexing or retraining), and refresh your golden sets so quality doesn’t quietly slide. When the numbers say a deeper build will lower latency or unit cost, go custom; otherwise, stay hybrid and ship more slices. This steady cadence is what a good AI managed service provider will leave behind: a simple loop that your team can run without heroics.
