Introduction

Clinicians don’t struggle because there’s too little data. They struggle because it’s scattered, stale, and contradictory. Different EHRs tell different stories; lab codes don’t line up; imaging sits in a silo. AI in medicine can’t help if its inputs are a mess. MedAI’s bet is simple: fix integration first, then let clinical AI do its job. That means faster decisions, safer handoffs, and real healthcare efficiency.

Why data chaos persists

- Heterogeneity by design. Hospitals inherit HL7v2 feeds, DICOM archives, and a patchwork of FHIR APIs that represent the same concept five ways.
- Identity drift. One patient, three MRNs, two name spellings. EMPI tasks never quite finish.
- Timing gaps. Results arrive late; device streams drop packets; ADT events don’t reconcile with orders.
- Governance debt. Policies exist but aren’t codified; lineage is tribal knowledge.

The outcome? AI in healthcare models operate on partial truths. Alerts fire late. Duplicated tests creep up. Clinicians retype the same history again. Waste hides in the seams.

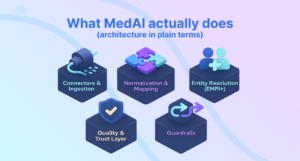

What MedAI actually does (architecture in plain terms)

MedAI sits between the data sources and everything downstream: care teams, analytics, and clinical AI services.

- Connectors & ingestion. HL7v2 (ADT/ORM/ORU), FHIR (R4/R5), DICOM, device telemetry, claims/eligibility, pharmacy. MedAI uses change data capture (CDC) where available to maintain near-real-time feeds.
- Normalization & mapping. Concepts are harmonized to SNOMED CT, LOINC, and RxNorm; units are standardized; observation panels are decomposed; imaging metadata is fixed.
- Entity resolution (EMPI+). Probabilistic and rule-based matching reconciles patients, providers, and encounters. Deterministic links are pinned; ambiguous merges are queued for review.
- Quality & trust layer. Freshness SLAs, completeness checks, dedupe, constraint validation (e.g., biologically impossible vitals), and confidence scores shipped with each record.
- Access & orchestration. Streaming topics for real-time AI in medicine, lakehouse tables for analytics, and stable FHIR APIs for apps.
- Guardrails. Role-based access, audit, PHI policies (de-identification for research), and lineage from source message to normalized record.
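
As a rough illustration of the normalization and constraint-validation steps above, the sketch below converts a reading to a canonical unit and flags implausible values. The conversion table, the plausibility bounds, and the `normalize` function are hypothetical names chosen for this example, not MedAI's API, and the bounds are not clinical guidance.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# (concept, source unit) -> (canonical unit, converter). Illustrative entries only.
CONVERTERS: Dict[Tuple[str, str], Tuple[str, Callable[[float], float]]] = {
    ("body_temp", "[degF]"): ("Cel", lambda f: (f - 32.0) * 5.0 / 9.0),
    ("body_temp", "Cel"): ("Cel", lambda c: c),
    ("glucose", "mmol/L"): ("mg/dL", lambda v: v * 18.016),
    ("glucose", "mg/dL"): ("mg/dL", lambda v: v),
}

# Assumed plausibility bounds in canonical units (hypothetical values).
PLAUSIBLE_RANGE = {"body_temp": (25.0, 45.0), "glucose": (10.0, 2000.0)}


@dataclass(frozen=True)
class NormalizedObservation:
    concept: str
    value: float
    unit: str
    plausible: bool


def normalize(concept: str, value: float, unit: str) -> NormalizedObservation:
    """Convert to the canonical unit, then apply a plausibility check."""
    key = (concept, unit)
    if key not in CONVERTERS:
        # Unknown pairs are rejected rather than guessed.
        raise ValueError(f"no mapping for {concept!r} in unit {unit!r}")
    canonical_unit, convert = CONVERTERS[key]
    converted = round(convert(value), 2)
    low, high = PLAUSIBLE_RANGE[concept]
    return NormalizedObservation(concept, converted, canonical_unit, low <= converted <= high)
```

A reading that fails the check is kept and flagged rather than dropped, so a reviewer can still trace it back to the source message.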

Integration layers, failure modes, and controls

MedAI Data Integration Map: From Chaos to Usable Signals

| Layer | What MedAI handles | Key standards | Typical failure modes | Controls & KPIs (healthcare efficiency) |
| --- | --- | --- | --- | --- |
| Ingestion | HL7v2, FHIR, DICOM, devices, pharmacy, claims | HL7v2, FHIR R4/R5, DICOM, ASTM | Dropped messages, out-of-order events | End-to-end ACKs; replay queues; Freshness P95; message loss <0.1% |
| Normalization | Code systems, units, panel expansion | SNOMED, LOINC, RxNorm, UCUM | Code drift, unit mismatches | Auto-mapping + manual adjudication; Mapping accuracy >98%; unit mismatch rate |
| Entity resolution (EMPI) | Patient/provider/encounter linking | Custom + IHE PIX/PDQ | False merges/splits | Thresholds + human review; duplicate rate ↓; merge error rate |
| Quality & trust | Completeness, plausibility, dedupe | Business rules + stats | Stale or impossible values | Freshness SLA, completeness %, anomaly hits |
| Access & orchestration | Streams, lakehouse, APIs | Kafka, Parquet/Delta, FHIR | Ad-hoc “shadow” data marts | Golden topics/tables; lineage coverage %, API uptime |

When these numbers move the right way, AI in healthcare becomes reliable instead of brittle.

Real-time clinical use cases (that work today)

- Cross-venue medication reconciliation. MedAI merges pharmacy claims with inpatient MAR and ambulatory med lists. A copilot in the chart presents a unified, deduped list with discrepancies flagged. Result: fewer omissions, fewer calls to the pharmacy. AI in medicine, grounded.
- Sepsis early signals. Streaming vitals, labs, and ADT events produce a consistent feature window for a triage model. Alerts fire with provenance and confidence scores; nurses can drill back to the raw measurements.
- Imaging at the point of care. A DICOM broker plus metadata fixes let ED physicians fetch prior studies from a different facility inside the viewer. Duplicate CTs drop.
- Pre-op optimization. Normalized labs and problem lists feed a surgical risk score; the workflow orders missing tests and consults automatically. This is clinical AI as a checklist with teeth.
- Care coordination. Clean discharge summaries, reconciled meds, and FHIR tasks move to community PCPs through a standards-first handoff.

After oncology data harmonization, “time to first complete tumor board packet” dropped from days to hours. The model didn’t get smarter. The inputs did.

Security, privacy, and regulatory posture

- Access control: least-privilege roles; emergency break-glass with audit.
- PHI scope: de-identification for research (expert determination or Safe Harbor); Limited Data Sets with DUAs.
- Lineage & explainability: every AI recommendation references the normalized record and the original source message.
- Change control: versioned mappings, pinned terminologies, staged rollouts with rollback plans.
- Ops hygiene: immutable logs, tamper-evident stores, regular red-team drills. Compliance is not paperwork; it’s muscle memory.

KPIs & ROI you can show a CFO

Operational KPIs

- Data freshness P95 (min); completeness per domain; duplicate order rate; prior-study retrieval rate; chart prep time.
- Trust metrics: mapping accuracy; EMPI duplicate rate; lineage coverage.
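
Two of these KPIs are simple enough to sketch directly. The example below assumes per-record lag is already available in minutes and uses the nearest-rank definition of the 95th percentile; the function names and the choice of "complete" (every required field present and non-empty) are assumptions for illustration.

```python
from typing import Iterable, Mapping, Sequence


def freshness_p95(lags_minutes: Sequence[float]) -> float:
    """Nearest-rank 95th percentile of source-to-platform lag, in minutes."""
    if not lags_minutes:
        raise ValueError("no lag samples")
    ordered = sorted(lags_minutes)
    # Integer ceiling of 0.95 * n avoids floating-point rounding surprises.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def completeness_pct(records: Sequence[Mapping], required_fields: Iterable[str]) -> float:
    """Percentage of records in which every required field is present and non-empty."""
    if not records:
        return 0.0
    fields = list(required_fields)
    complete = sum(
        1 for r in records if all(r.get(f) not in (None, "") for f in fields)
    )
    return 100.0 * complete / len(records)
```

Reporting P95 rather than the mean keeps a handful of very late results from hiding behind an otherwise healthy average.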

Clinical & financial signals

- Avoided duplicate imaging/tests.
- Earlier interventions (e.g., sepsis bundle initiation times).
- Documentation completeness; denied claims due to coding mismatches.
- Clinician time shifted from hunting to deciding.

Go-Live Readiness & Safety Gates

Clear these gates before turning on any downstream AI in healthcare use case:

- Data trust meets targets: EMPI duplicate rate ≤ 0.5%, mapping accuracy ≥ 98% (LOINC/RxNorm/SNOMED), freshness P95 ≤ 15 min (labs/devices).
- Lineage and audit live: complete lineage from source message to normalized record to clinical AI feature store, backed by immutable logs.
- Identity stable: rollback for bad merges tested; manual review queue backlog < 48 hours; auto-link threshold tuned.
- Change control pinned: versioned mappings and terminologies; staged updates (shadow → limited → general) with one-click rollback.
- Owners assigned: safety owners identified, operations and clinical stewards named, an on-call schedule established, and weekly drift and incident reviews scheduled.
- Fail-open behavior: if a feed degrades, systems degrade gracefully (hide suggestions, keep raw data visible, alert owners).
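
The numeric gates above lend themselves to an automated check. The following is a minimal sketch using the thresholds stated in this list; the `TrustMetrics` structure and `failed_gates` function are hypothetical, and the non-numeric gates (ownership, lineage, rollback drills) would still need human sign-off.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TrustMetrics:
    empi_duplicate_rate: float   # fraction, so 0.005 means 0.5%
    mapping_accuracy: float      # fraction, so 0.98 means 98%
    freshness_p95_min: float     # minutes
    review_backlog_hours: float  # age of the oldest item in the manual review queue


def failed_gates(m: TrustMetrics) -> List[str]:
    """Return the names of the numeric gates that are not met; empty means go."""
    failures = []
    if m.empi_duplicate_rate > 0.005:
        failures.append("empi_duplicate_rate")
    if m.mapping_accuracy < 0.98:
        failures.append("mapping_accuracy")
    if m.freshness_p95_min > 15:
        failures.append("freshness_p95_min")
    if m.review_backlog_hours >= 48:
        failures.append("review_backlog_hours")
    return failures
```

Returning the list of failures, rather than a single boolean, tells the on-call owner which gate to look at first.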

Ops Runbooks for High-Noise Domains

Laboratories. Expand panels, enforce biological plausibility, and normalize units (UCUM). If the unit mismatch rate spikes, freeze new maps and roll back to the last known-good version.

Medications. Reconcile claims, MAR, and ambulatory lists; use RxNorm ingredients and strengths to collapse duplicates; and flag any conflicts in the chart copilot.
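
A minimal sketch of the collapse-and-flag step follows. It assumes each entry has already been resolved upstream to an ingredient and a strength (no RxNorm lookup is performed here), and the field names and `reconcile` function are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def reconcile(entries: List[Dict[str, str]]) -> Tuple[List[dict], List[str]]:
    """Collapse entries with the same ingredient and strength; flag ingredients
    that appear with more than one strength across sources."""
    sources_by_key = defaultdict(set)         # (ingredient, strength) -> sources
    strengths_by_ingredient = defaultdict(set)
    for e in entries:
        ingredient = e["ingredient"].strip().lower()
        strength = e["strength"].replace(" ", "").lower()
        sources_by_key[(ingredient, strength)].add(e["source"])
        strengths_by_ingredient[ingredient].add(strength)

    merged = [
        {"ingredient": ing, "strength": st, "sources": sorted(srcs)}
        for (ing, st), srcs in sorted(sources_by_key.items())
    ]
    conflicts = sorted(
        ing for ing, strengths in strengths_by_ingredient.items() if len(strengths) > 1
    )
    return merged, conflicts


# Example input: the same drug reported by two sources, plus a strength conflict.
meds = [
    {"ingredient": "Metformin", "strength": "500 mg", "source": "claims"},
    {"ingredient": "metformin", "strength": "500mg", "source": "MAR"},
    {"ingredient": "lisinopril", "strength": "10 mg", "source": "ambulatory"},
    {"ingredient": "lisinopril", "strength": "20 mg", "source": "MAR"},
]
merged, conflicts = reconcile(meds)
```

Conflicts are surfaced rather than resolved automatically, since choosing between two strengths is a clinical decision.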

Imaging. Fix DICOM metadata (Accession/StudyInstanceUID), broker priors across sites, and surface provenance in the viewer; if prior-fetch fails, initiate a manual query before placing another order.

Devices. De-spike streams, align time zones, and maintain both “observed” and “received” timestamps so that clinical AI features remain truthful.
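
One simple way to de-spike a device stream is a rolling median filter, sketched below. This is an assumed technique for illustration rather than a description of MedAI's implementation; the window size and `max_jump` threshold are hypothetical and would need tuning per signal.

```python
from statistics import median
from typing import List, Sequence


def despike(values: Sequence[float], window: int = 3, max_jump: float = 30.0) -> List[float]:
    """Replace a sample with its local median when it deviates from that median
    by more than max_jump; otherwise keep the sample unchanged."""
    half = window // 2
    cleaned = []
    for i, v in enumerate(values):
        # Neighborhood is clipped at the edges of the series.
        neighborhood = values[max(0, i - half): i + half + 1]
        local = median(neighborhood)
        cleaned.append(local if abs(v - local) > max_jump else v)
    return cleaned


heart_rate = [72, 74, 210, 75, 73]  # one artifact in an otherwise steady series
smoothed = despike(heart_rate)
```

Keeping the raw series alongside the cleaned one preserves the ability to drill back to what the device actually reported.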

Technical FAQs

How does MedAI help clinical AI models generalize across sites?

By standardizing inputs (SNOMED/LOINC/RxNorm), aligning units, and stabilizing identity links before modeling. Models trained on normalized features see less spurious variance and more signal: AI in healthcare that travels.

HL7v2 vs FHIR vs DICOM: what goes where?

HL7v2 still dominates for events (ADT/ORM/ORU). FHIR is the contract for modern APIs and app integration. DICOM carries images and rich metadata. MedAI ingests all three, then exposes FHIR for apps and curated streams/lakehouse tables for clinical AI and analytics.

How do you prevent dangerous merges in EMPI?

Dual thresholds (auto-link, manual review) with blocking keys, referential constraints, and rollbacks for bad merges. KPIs include duplicate rate and merge error rate. Patient safety beats over-eager linking every time.
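
The dual-threshold idea can be sketched as follows. A simple weighted-agreement score stands in here for a real probabilistic matcher, date of birth is used as the blocking key, and the weights, thresholds, and field names are all assumptions for illustration.

```python
from typing import Mapping

# Hypothetical integer weights (points out of 100) for exact field agreement.
WEIGHTS = {"dob": 40, "last_name": 30, "first_name": 20, "zip": 10}


def match_score(a: Mapping[str, str], b: Mapping[str, str]) -> int:
    """Sum the weights of fields that are present and identical in both records."""
    return sum(w for f, w in WEIGHTS.items() if a.get(f) and a.get(f) == b.get(f))


def decide(a: Mapping[str, str], b: Mapping[str, str],
           auto_link: int = 90, review: int = 60) -> str:
    """Return 'auto_link', 'manual_review', or 'no_link' for a candidate pair."""
    # Blocking: only pairs sharing a date of birth are compared at all.
    if not a.get("dob") or a.get("dob") != b.get("dob"):
        return "no_link"
    score = match_score(a, b)
    if score >= auto_link:
        return "auto_link"
    if score >= review:
        return "manual_review"
    return "no_link"
```

The gap between the two thresholds is where human review earns its keep: pairs that look similar but not identical go to a steward instead of being merged silently.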

Can we trust timestamps for real-time AI in medicine?

Only if you verify them. MedAI reconciles device clocks, normalizes time zones, and preserves both “observed” and “received” times. Freshness SLAs are enforced at the topic/table level so AI in diagnostics isn’t reasoning on stale data.
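
As a sketch of the timestamp handling described above, the example below normalizes a device-local time to UTC and keeps both timestamps on the record. The `Reading` class, the fixed-offset assumption for naive timestamps, and the 15-minute default are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


def to_utc(ts: datetime, assumed_offset_hours: float = 0.0) -> datetime:
    """Normalize a timestamp to UTC. Naive timestamps are interpreted using the
    source's assumed fixed UTC offset; aware timestamps are simply converted."""
    if ts.tzinfo is None:
        ts = ts.replace(tzinfo=timezone(timedelta(hours=assumed_offset_hours)))
    return ts.astimezone(timezone.utc)


@dataclass(frozen=True)
class Reading:
    observed_at: datetime  # when the measurement was taken (UTC)
    received_at: datetime  # when the platform ingested it (UTC)

    @property
    def transport_lag(self) -> timedelta:
        return self.received_at - self.observed_at

    def is_stale(self, now: datetime, max_age: timedelta = timedelta(minutes=15)) -> bool:
        # Staleness is judged from the observation time, not the arrival time.
        return now - self.observed_at > max_age


# A device at UTC-5 reports 08:00 local; the platform receives it at 13:04 UTC.
reading = Reading(
    observed_at=to_utc(datetime(2024, 1, 1, 8, 0), assumed_offset_hours=-5),
    received_at=datetime(2024, 1, 1, 13, 4, tzinfo=timezone.utc),
)
```

Judging staleness from `observed_at` matters because a reading that arrived a minute ago may still describe the patient as they were an hour ago.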

How does MedAI handle terminology drift (new LOINC codes, etc.)?

Version-pinned catalogs, backward-compatible mappings, and change advisories. New terms go through a shadow period with monitoring. If mapping confidence dips, alerts route to data stewards.
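
A version-pinned catalog with a shadow period might look roughly like the sketch below. The `MappingCatalog` class and its methods are hypothetical, the version labels are made up, and the code strings are illustrative values that should be verified against whichever terminology release is actually pinned.

```python
from typing import Dict, Iterable, Optional, Tuple


class MappingCatalog:
    """Holds several mapping versions; serves one pinned version while a
    candidate runs in shadow for comparison."""

    def __init__(self) -> None:
        self._versions: Dict[str, Dict[str, str]] = {}
        self.pinned: Optional[str] = None
        self.previous: Optional[str] = None
        self.shadow: Optional[str] = None

    def register(self, version: str, mapping: Dict[str, str]) -> None:
        self._versions[version] = dict(mapping)

    def pin(self, version: str) -> None:
        if version not in self._versions:
            raise KeyError(version)
        self.previous, self.pinned = self.pinned, version

    def start_shadow(self, version: str) -> None:
        if version not in self._versions:
            raise KeyError(version)
        self.shadow = version

    def lookup(self, local_code: str) -> Optional[str]:
        # Production lookups only ever see the pinned version.
        return self._versions[self.pinned].get(local_code)

    def shadow_diff(self, local_codes: Iterable[str]) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
        """Codes whose pinned and shadow mappings disagree, as (pinned, shadow)."""
        live, candidate = self._versions[self.pinned], self._versions[self.shadow]
        return {c: (live.get(c), candidate.get(c))
                for c in local_codes if live.get(c) != candidate.get(c)}

    def rollback(self) -> None:
        if self.previous is None:
            raise RuntimeError("no previous version to roll back to")
        self.pinned, self.previous = self.previous, None
```

The output of `shadow_diff` is the kind of artifact a data steward could review before a candidate version is promoted.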

What’s the safest path to production?

Silent mode first, then limited enablement. Every feature ships with lineage, confidence, and an owner. Weekly reviews check alert burden, subgroup behavior, and data quality drift. Rollback remains a one-click reality.

Clinical Data Chaos

Great clinical AI starts with great plumbing. MedAI turns fragmented feeds into trustworthy, timely signals, so AI in medicine can inform decisions, not just dashboards. Clean inputs. Clear lineage. Calm rollouts. That’s how AI in healthcare delivers real healthcare efficiency where it counts: the moment a clinician has to decide.
